AI Music Ensemble Technology

AI Musician that Synchronizes with the Player

Many pieces are intended to be performed by multiple people in a music ensemble. It can be unsatisfying to play only one part of such ensemble pieces by yourself, such as when you are practicing alone or it’s difficult to get together with your fellow performers. You will have more fun playing your instrument every day if you have someone to play with you anytime, anywhere. At Yamaha, we are developing an AI system that plays together with you as an ensemble partner.

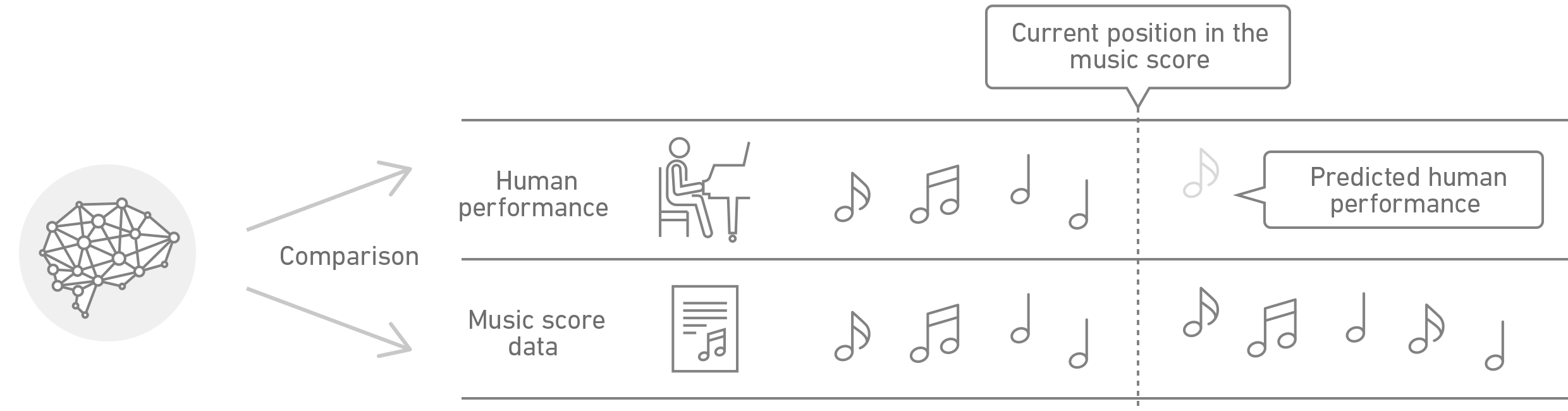

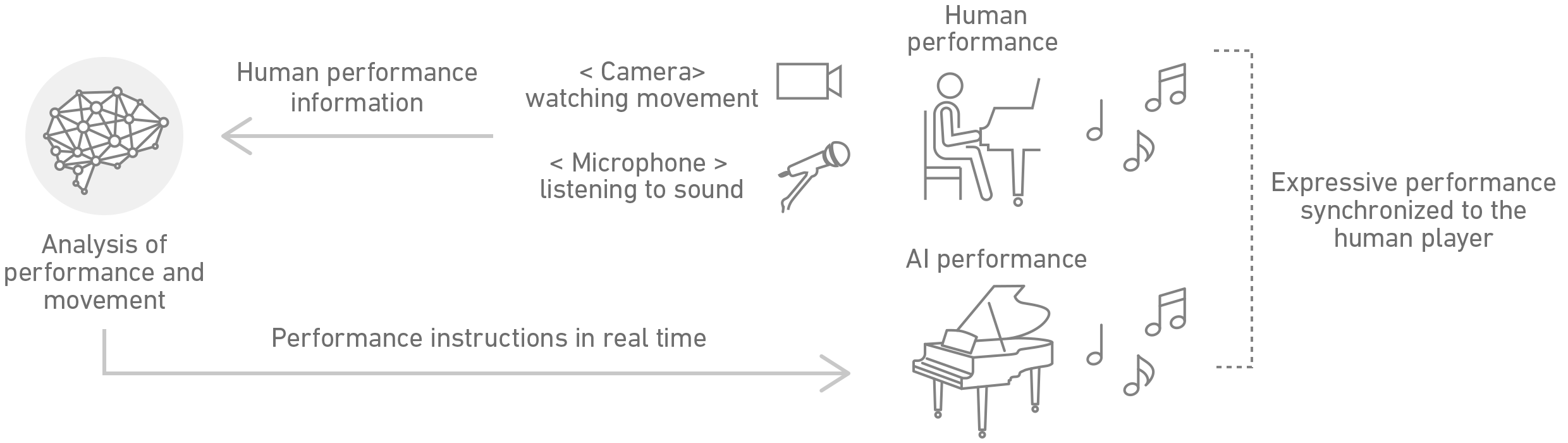

The AI compares the notes you are playing with the sheet music in real time, and works out which part of the score you are currently playing, at what speed, and with what kind of musical expression. By predicting how the performer will play the upcoming passages, the AI can play along at the performer’s timing.
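As a rough illustration of the idea of tracking score position and speed, the following minimal sketch matches incoming notes against a score and extrapolates the next onset. It is not Yamaha's method: the `ScoreNote` and `ScoreFollower` names, the greedy matching rule, and the `lookahead` parameter are all assumptions made for this example, and notes are assumed to arrive as already-detected MIDI pitches with timestamps.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ScoreNote:
    pitch: int   # MIDI note number
    beat: float  # onset position in the score, in beats


class ScoreFollower:
    """Greedy follower (hypothetical): matches each played note to a nearby score note."""

    def __init__(self, score: List[ScoreNote], lookahead: int = 3):
        self.score = score
        self.lookahead = lookahead  # how many upcoming score notes to search
        self.next_index = 0         # index of the next score note we expect
        self.last_match: Optional[Tuple[float, float]] = None  # (beat, seconds)
        self.sec_per_beat: Optional[float] = None              # current tempo estimate

    def hear(self, pitch: int, time_s: float) -> bool:
        """Feed one played note; return True if it matched the score."""
        stop = min(self.next_index + self.lookahead, len(self.score))
        for i in range(self.next_index, stop):
            note = self.score[i]
            if note.pitch != pitch:
                continue
            if self.last_match is not None and note.beat > self.last_match[0]:
                beat0, time0 = self.last_match
                # Tempo from the two most recent matched notes.
                self.sec_per_beat = (time_s - time0) / (note.beat - beat0)
            self.last_match = (note.beat, time_s)
            self.next_index = i + 1
            return True
        return False  # unmatched note (possibly a mistake): keep the position

    def predict_onset(self, beat: float) -> Optional[float]:
        """Extrapolate when the given score beat will be reached."""
        if self.last_match is None or self.sec_per_beat is None:
            return None
        beat0, time0 = self.last_match
        return time0 + (beat - beat0) * self.sec_per_beat


score = [ScoreNote(60, 0.0), ScoreNote(62, 1.0), ScoreNote(64, 2.0), ScoreNote(65, 3.0)]
follower = ScoreFollower(score)
for pitch, t in [(60, 0.0), (62, 0.5), (64, 1.0)]:
    follower.hear(pitch, t)
predicted = follower.predict_onset(3.0)  # estimated time of the next score note
```

Here the performer plays one beat every half second, so the sketch extrapolates the fourth note from the last matched note and the current tempo estimate. A practical system would work from audio rather than clean note events and would use a probabilistic model instead of this greedy rule.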

This analysis can be applied to a piano, a solo instrument such as a violin or a flute, a performance by several people, or a complex performance by an orchestra. Beyond ensemble playing, it can also be used to control other equipment, such as stage lighting or video playback.
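One way to picture the equipment-control use case is a list of cues tied to score positions that fire as the tracked position passes them. The sketch below assumes that some tracker already reports the current position in beats; the `due_cues` function and the cue names are hypothetical and only illustrate the idea.

```python
from typing import List, Tuple


def due_cues(cues: List[Tuple[float, str]], prev_beat: float, curr_beat: float) -> List[str]:
    """Return the cues whose score position lies in (prev_beat, curr_beat]."""
    return [name for beat, name in cues if prev_beat < beat <= curr_beat]


# Hypothetical cue sheet: (score position in beats, action to trigger).
cues = [(0.0, "house lights down"), (16.0, "spotlight on piano"), (32.0, "start video")]

fired = due_cues(cues, 15.5, 16.25)   # position update that crosses beat 16
later = due_cues(cues, 16.25, 17.0)   # no cue in this interval
```

Because the cues are keyed to the score rather than to wall-clock time, they stay aligned with the music even when the performer speeds up or slows down.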

How it Works

The AI analyzes a human performance from three main perspectives.

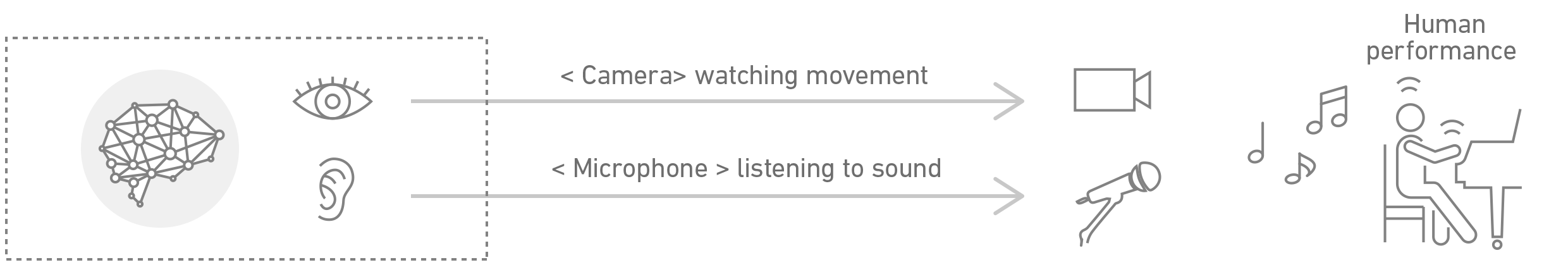

1. Using sound and motion data to analyze the person’s performance

The notes played by the performer are captured by a microphone, and the AI analyzes the various data contained in the performance. A camera can be used to capture the performer’s movements and predict the timing of the performance more precisely. It is also possible to use a line input to acquire and analyze the notes being played, and to use sensors to detect movement.

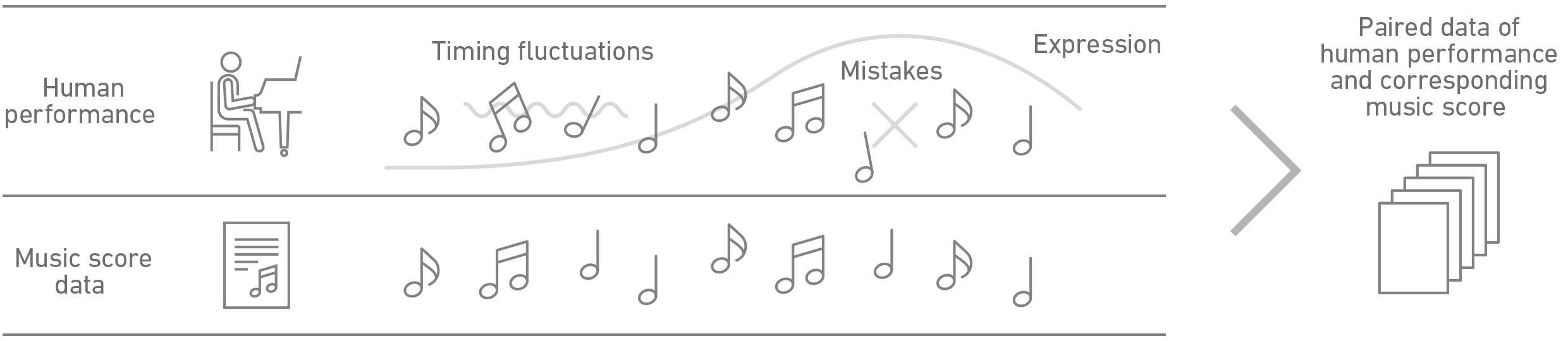

2. Predicting tempo changes, mistakes, and musical expressions in a performance

By comparing the notes played by the performer with the sheet music, the AI can infer the tempo and musical expression of the upcoming performance. When playing music, a performer expresses emotion by varying the tempo, and sometimes makes mistakes or deviates from the score. The AI therefore has to compare the performance with the sheet music while allowing for such human deviations. By studying human performances and the sheet music of a variety of pieces, the AI learns the typical kinds of deviation that occur in human performance. This allows the AI to play its part at the appropriate timing, even if the performer changes the tempo for expressive effect or accidentally makes a mistake.

The AI can also recognize musical expression in a performance, such as rousing or quiet passages. By learning the various expressions of the piece in advance, the AI can adapt its playing to the performer’s musical expression. Furthermore, by specializing in the playing of a specific performer, the AI can learn that person’s characteristic tempo changes and tendency to make mistakes.
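To make the notion of comparing a performance with the score while tolerating mistakes more concrete, the sketch below aligns a played pitch sequence with the score and labels the differences. It uses Python's standard `difflib.SequenceMatcher` as a simple rule-based stand-in; the text above describes a model learned from performance data, which this example does not attempt to reproduce. The `classify_deviations` function and its labels are assumptions made for illustration.

```python
from difflib import SequenceMatcher
from typing import List, Tuple

Deviation = Tuple[str, List[int], List[int]]  # (label, score pitches, played pitches)


def classify_deviations(score: List[int], played: List[int]) -> List[Deviation]:
    """Align played MIDI pitches with the score and label each mismatch."""
    events: List[Deviation] = []
    matcher = SequenceMatcher(None, score, played, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # played as written
        if tag == "replace":
            events.append(("wrong_note", score[i1:i2], played[j1:j2]))
        elif tag == "delete":
            events.append(("skipped", score[i1:i2], []))
        elif tag == "insert":
            events.append(("extra", [], played[j1:j2]))
    return events


score = [60, 62, 64, 65, 67]  # C D E F G
wrong = classify_deviations(score, [60, 62, 63, 65, 67])  # E played as E-flat
skipped = classify_deviations(score, [60, 62, 65, 67])    # E left out
```

In both cases the alignment recovers after the mistake, so an accompanist relying on it would still know where in the score the performer is. This version works offline on complete sequences; a real-time system would have to make the same decisions incrementally.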

3. Adjusting timing

When you listen to human performers playing in an ensemble, it feels like everyone is playing at the same tempo. But in fact, the performer who is leading the tempo will change depending on the musical context of each part, and the others follow that tempo to match their timing. This exquisite timing creates an ensemble that fits the musical context.

The AI learns how to make timing adjustments that are unique to humans by studying human performance and music score data. By studying the tendencies of human performers to lead or follow, the AI can learn to match the timing very naturally for any part based on the musical context when playing in an ensemble.
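The lead-or-follow behavior can be illustrated with a linear phase-correction rule, a simple model often used to describe how people synchronize with one another. This is a sketch under stated assumptions rather than the system's actual algorithm: the `next_onset` function, the `coupling` parameter, and the per-section coupling values are hypothetical, and in the approach described above such tendencies would be learned from data rather than set by hand.

```python
def next_onset(own_prev: float, partner_prev: float, period: float, coupling: float) -> float:
    """Schedule the next note, correcting part of the last timing error.

    coupling = 1.0 follows the partner completely; 0.0 ignores the partner (leads).
    """
    asynchrony = own_prev - partner_prev  # > 0 means we played late
    return own_prev + period - coupling * asynchrony


# Hypothetical coupling per musical context: follow when the human has the
# melody, lead more when the accompaniment carries it.
COUPLING_BY_CONTEXT = {"human_has_melody": 0.9, "ai_has_melody": 0.2}

# The AI played 50 ms after the human on the last beat (period: 0.5 s).
following = next_onset(1.05, 1.00, 0.5, COUPLING_BY_CONTEXT["human_has_melody"])
leading = next_onset(1.05, 1.00, 0.5, COUPLING_BY_CONTEXT["ai_has_melody"])
```

With strong coupling the AI removes most of the 50 ms error on the next beat, whereas with weak coupling it largely keeps its own timing and leaves the adjustment to the human.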

AI that Influences People

When you perform with multiple people, you create an ensemble in which the players understand and influence one another, rather than one in which everyone unilaterally matches a specific person. If the AI could respond to your intentions and lead you in directions that you didn’t expect, playing with it might become even more interesting and challenging. In the future, we hope to make the AI better understand the intentions of performers and provide inspiration while playing together with them in an ensemble.

Application Examples

9th Symphony for Everyone

The concert event “JOYFUL PIANO” was held at Suntory Hall’s Blue Rose Hall on Thursday, December 21, 2023. Three pianists with disabilities performed Beethoven’s Symphony No. 9 together with an orchestra and a choir.

In this concert event, “Daredemo Piano”, a performance assist system that utilizes AI ensemble technology, was used to support the pianists’ performance. When a given melody is played on an automatic piano, this system assists the performance by automatically playing the accompaniment on the keyboard and operating the pedals. This allows anyone, regardless of age or experience, to use the piano to express their thoughts and feelings. The concert demonstrated that, with this technology, anyone with a passion for sharing music can make that dream a reality by performing with others.

Special Site: 9th Symphony for Everyone

Project SEKAI Piano

“Project SEKAI Piano,” an electronic piano based on AI ensemble technology, was exhibited at Yamaha musical instrument stores throughout Japan from Friday, March 26 to Sunday, June 27, 2021. Its virtual singers sing along when the performer plays a predetermined song. For this exhibit, Hatsune Miku and Hoshino Ichika (CV: Ruriko Noguchi) were programmed to sing along to “Senbonzakura” (lyrics and music: Kurousa) and “Aoku Kakero!” (lyrics and music: Marashii). Many people enjoyed interacting with the virtual singers.

Report: Technical Cooperation with Project SEKAI Piano (Japanese)

Otomai-no-Shirabe: Transcending Time and Space

The concert “Otomai-no-Shirabe: Transcending Time and Space” was held on Thursday, May 19, 2016 at the Sogakudo Concert Hall at Tokyo University of the Arts. AI ensemble technology was used to recreate the performance of the late maestro Sviatoslav Richter so that it could play along with present-day musicians. This made it possible for the Berliner Philharmoniker’s Scharoun Ensemble to perform with the legendary pianist on a stage transcending time and space.

Report: Otomai-no-Shirabe: Transcending Time and Space (Japanese)

Related Items

Technical Exhibits

- 2023 9th Symphony for Everyone

- 2023 YOXO FESTIVAL 2024 / Experience a New World of Music: “JAZZ EXPERIENCE” (Japanese)

- 2022 Yokohama Sound Festival 2022 / Let’s Enjoy the Daredemo Piano: SYNC AI Session (Japanese)

- 2022 TOKYO MET SaLaD MUSIC FESTIVAL 2022 / Daredemo Piano with the Tokyo Metropolitan Symphony Orchestra® (Japanese)

- 2021 Tokyo Music Evening Yube / Violin Recital by Tatsuki Narita (Japanese)

- 2021 Project SEKAI Piano / Hatsune Miku and Hoshino Ichika sing along with your piano performance! (Japanese)

- 2019 Ars Electronica Festival 2019 / Dear Glenn

- 2019 TOKYO MET SaLaD MUSIC FESTIVAL 2019 / Music conducting simulation of Tokyo Metropolitan Symphony Orchestra (Japanese)

- 2019 Yokohama Art Action Project / Daredemo Piano Exhibit (Japanese)

- 2019 TOKYO MET SaLaD MUSIC FESTIVAL 2019 / You can improvise on Pachelbel’s Canon with Tokyo Metropolitan Symphony Orchestra and AI (Japanese)

- 2018 South by Southwest 2018 / Duet with YOO

- 2017 Sogakudo Concert at Tokyo University of the Arts / Mai-Hitenyu (Japanese)

- 2017 DIGITAL CONTENT EXPO 2017 / Music Ensemble of the Future (Japanese)

- 2016 Sogakudo Concert at Tokyo University of the Arts / Otomai-no-Shirabe: Transcending Time and Space (Japanese)

- 2014 Blue Line Tokyo Creative Exhibition

- 2014 Nikoniko Chokaigi (Japanese)

Related Movies

- Playing the piano while Hatsune Miku sings “Senbonzakura”! by Yomii [Project SEKAI Piano] (Japanese)

- TOKYO MET SaLaD MUSIC FESTIVAL 2022 / Daredemo Piano with the Tokyo Metropolitan Symphony Orchestra® (Japanese)

- DIGITAL CONTENT EXPO 2017 / AI and Humans Performing Together: Music Ensemble of the Future (Japanese)

- AI Project YOO (Duet with YOO) (Japanese)

- Nikkei / Demonstration of performance with human violinist and AI Music Ensemble System (Japanese)

- Hatsune Miku dances with human pianist (Japanese)